What is AI Engineering?

Unpacking the mindset and methods of AI Engineering.

Artificial Intelligence (AI) is everywhere now. But just a few years ago, building intelligent software meant months of data preparation, model training, and complex infrastructure. It felt like something only research labs or tech giants could afford.

That’s no longer true.

With just a few lines of code, you can plug into some of the most powerful AI models ever created. These models are your building blocks, like LEGO bricks. You don’t need to shape each brick yourself. Just imagine what to build, put the pieces together, and bring your ideas to life.

This is AI Engineering. It is not about creating models from scratch, but about turning powerful models into useful products. You focus on the design, function, and impact.

No PhD required. No need to be a machine learning expert. The tools are accessible, and the opportunity is enormous. Today, everyone can start building AI applications.

So whether you're an experienced software engineer or a curious builder, this newsletter will help you to bridge theory and practice. You’ll learn the key ideas behind modern AI systems, and how to apply them to build real-world products.

Welcome to AI Engineering Unpacked.

From ML Engineering to AI Engineering

AI applications aren’t new. Translation apps, camera autofocus, and spam filters have all used AI for years. But building them used to be slow and expensive. Teams of ML researchers and engineers had to curate labeled data, design and train models, and deploy custom infrastructure. It could take months to ship even a basic product.

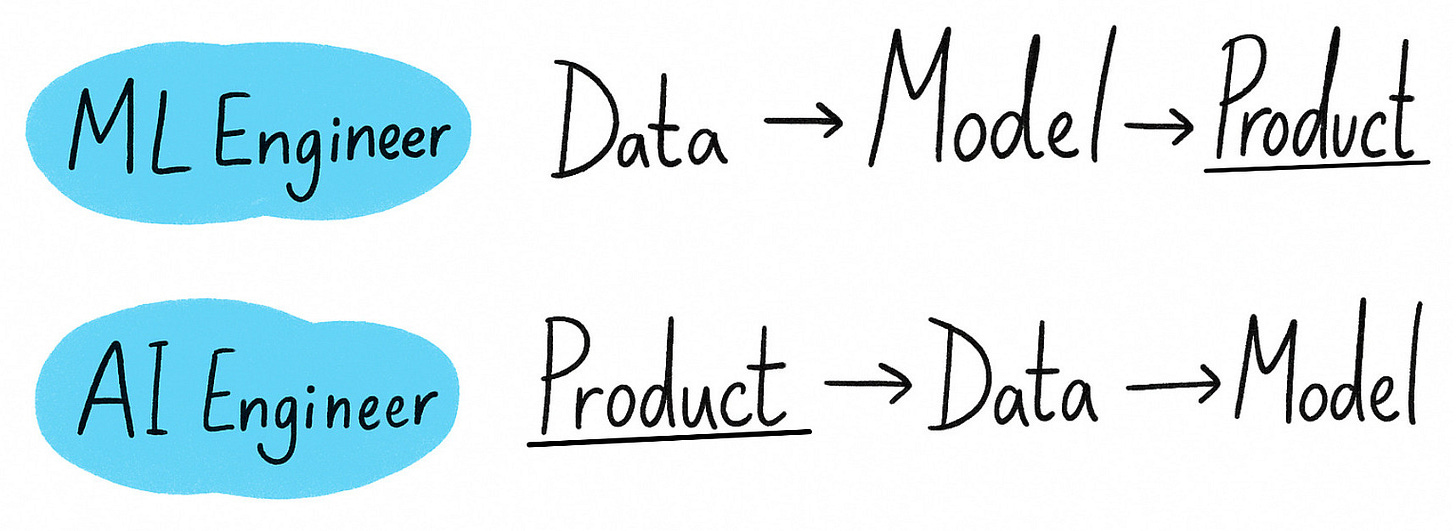

That was classical ML engineering: start with data, build a model, then wrap it in an application.

Today, that process has flipped.

With Large Language Models (LLMs) at your fingertips, you can build a translation app or a chatbot in a single evening. AI engineers no longer begin with data pipelines or model training. They start with the problem, design the user experience, and plug in powerful models to solve it. Only then do they customize, optimize, or fine-tune if needed.

This shift is changing what the role looks like. AI engineering blends software development, systems thinking, and human-centered design. It's less about training models and more about integrating intelligence into products. Pre-trained models are core components now, and AI engineering techniques are becoming standard tools.

The job now looks a lot like full-stack engineering, with a deep understanding of how large language models work under the hood.

From my own experience as Head of AI, this has changed how I hire. I don’t just look for ML expertise; I look for software engineering skills as well. It’s not just about knowing the models; it’s about knowing how to ship great products. That blend is what makes someone a great AI engineer.

That’s the essence of AI engineering: fast iteration, user focus, and turning cutting-edge models into real-world impact. You don’t need to wait to get started. The tools are here. And you can learn by building. Today.

What has changed?

What made this leap possible is a convergence of key advancements:

Scalable training methods: especially through self-supervised learning, which unlocked ways to train models without labeled data.

Smarter architectures: like transformers, which enabled generalization across different tasks.

Advances in hardware and distributed training: which made it feasible to train enormous models on vast datasets and run large-scale experiments.

These breakthroughs led to models that learned broad patterns across language, code, and images. Scaling laws taught us that bigger models, given the right ingredients, get dramatically better. Suddenly, one model could answer questions, write code, summarize documents, and carry on a conversation.

But what changed everything wasn’t just that models got better; it was that they became accessible.

Model-as-a-service flipped the AI equation. Now you can compose, customize, and deploy intelligent systems without ever developing a model. This lowered the barrier to entry and redefined both who can build with AI and what gets built.

AI isn’t just a research project anymore; it’s a software primitive. What used to be a machine learning challenge is now a software engineering opportunity.

The result? An explosion of AI-native products:

Developers are shipping AI features in days and startups are launching products that would’ve taken years to build from scratch!

Entire workflows are being rebuilt around intelligent systems.

And the potential is massive. It’s already reshaping how we work, learn, and create. This shift isn’t just technological; it's economic. PwC predicts AI could contribute up to $15.7 trillion to the global economy by 2030, with more than half of that driven by productivity gains.

Core Techniques

Let’s say your company wants to build a customer support chatbot. One that can answer user questions, handle orders, and maybe even process refunds. The AI engineer’s first job isn’t to dive into code, but to deeply understand the use case. What should the assistant know? How should it behave? What actions should it take? Most importantly: what does success look like, and how will it be measured?

Only then will the AI engineer begin customizing the model for the specific task.

1. Guide with Prompts

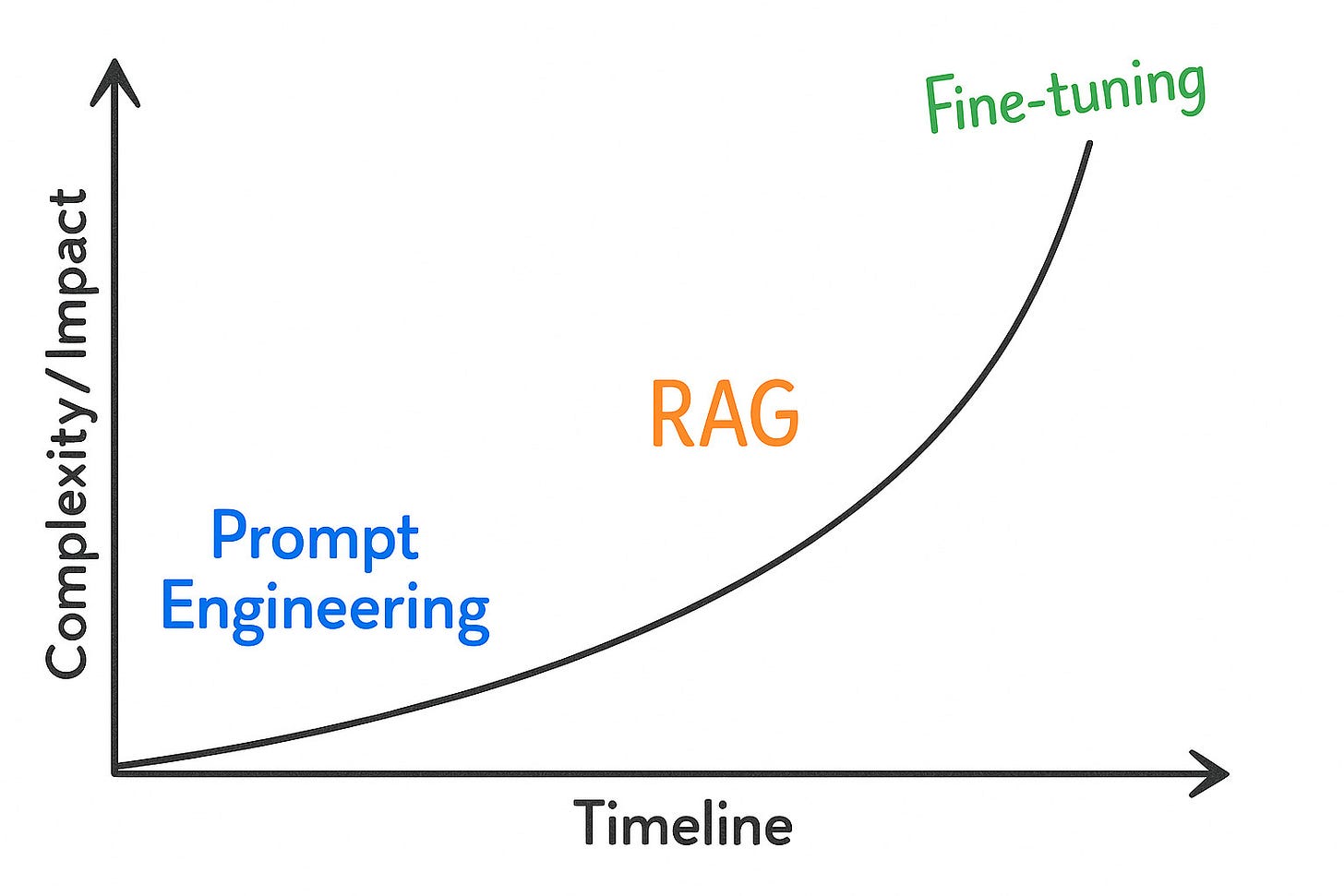

The first step is prompt engineering. This means crafting natural language instructions that guide the model’s behavior. You can define the assistant’s role, set its tone, and provide examples or constraints. When crafted effectively, prompts can deliver surprisingly strong results with minimal effort. You can learn more about prompt engineering in this issue.
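To make this concrete, here is a minimal sketch of how a prompt with a role, a tone, constraints, and an example could be assembled for the support chatbot. The function name `build_support_prompt` and all the sample text are hypothetical, chosen only for illustration; real prompts are usually refined through trial and error.

```python
def build_support_prompt(role, tone, constraints, examples, question):
    """Assemble one prompt string from a role, tone, rules, and few-shot examples."""
    lines = [f"You are {role}.", f"Tone: {tone}.", "", "Rules:"]
    lines += [f"- {rule}" for rule in constraints]
    lines += ["", "Examples:"]
    for user_msg, reply in examples:
        lines += [f"User: {user_msg}", f"Assistant: {reply}", ""]
    # End with the real question so the model continues as the assistant.
    lines += [f"User: {question}", "Assistant:"]
    return "\n".join(lines)


prompt = build_support_prompt(
    role="a customer support assistant for an online store",
    tone="friendly and concise",
    constraints=[
        "Only answer questions about orders and refunds.",
        "If unsure, offer to hand over to a human agent.",
    ],
    examples=[
        ("Where is my order?", "I can check that for you. Could you share your order number?"),
    ],
    question="Can I return a damaged item?",
)
print(prompt)
```

The resulting string is what you would send to the model. Keeping the pieces as separate arguments makes it easy to change the tone or add a rule without rewriting the whole prompt.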

2. Bring in Knowledge

But prompts have limits. If the model struggles to answer specific questions, such as details about your company’s return policy, you need to give it access to external knowledge. This is where retrieval-augmented generation (RAG) comes in. Instead of packing all relevant info into a prompt, RAG pulls the right data on demand and feeds it to the model as context. This improves accuracy and expands the model’s knowledge without retraining it.
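The idea can be sketched in a few lines. This is a toy version under strong assumptions: the documents are made-up sample text, and retrieval is a simple word-overlap score rather than the embedding-based search that production RAG systems typically use.

```python
import re

# Hypothetical knowledge base; in practice this comes from your own documents.
DOCUMENTS = [
    "Return policy: items can be returned within 30 days of delivery.",
    "Shipping: standard delivery takes 3 to 5 business days.",
    "Refunds are issued to the original payment method.",
]


def tokenize(text):
    """Lowercase the text and split it into a set of words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(query, documents, top_k=1):
    """Rank documents by how many words they share with the query."""
    query_words = tokenize(query)
    ranked = sorted(
        documents,
        key=lambda doc: len(query_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:top_k]


def build_rag_prompt(query, documents, top_k=1):
    """Put the retrieved text into the prompt as context for the model."""
    context = "\n".join(retrieve(query, documents, top_k))
    return (
        "Answer using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}\nAnswer:"
    )


print(build_rag_prompt("What is your return policy?", DOCUMENTS))
```

Only the most relevant snippet ends up in the prompt, which keeps the prompt short while still giving the model the facts it needs.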

3. Change the Behavior

If that still isn’t enough, and the model needs to follow a more specific behavior or tone, fine-tuning may be the next step. This involves further training a model on your own data so that its outputs consistently reflect that behavior. Fine-tuning is more expensive and complex, so use it only when necessary.
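Much of the work in fine-tuning is preparing the data: pairs of inputs and the outputs you want the model to produce. Below is a minimal sketch of writing such pairs as JSON Lines, a format many fine-tuning services accept in some form. The field names `prompt` and `completion` and the example texts are assumptions for illustration; each provider defines its own schema, so check its documentation before uploading anything.

```python
import json

# Hypothetical examples of the tone we want the assistant to adopt.
EXAMPLES = [
    {"prompt": "Where is my order?",
     "completion": "Happy to help! Could you share your order number?"},
    {"prompt": "I want a refund.",
     "completion": "Of course. Let me look into that for you right away."},
]


def to_jsonl(examples):
    """Serialize examples as JSON Lines: one JSON object per line."""
    return "\n".join(json.dumps(example, ensure_ascii=False) for example in examples)


def from_jsonl(text):
    """Parse JSON Lines back into a list of dictionaries."""
    return [json.loads(line) for line in text.splitlines() if line.strip()]


training_file = to_jsonl(EXAMPLES)
print(training_file)
```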

4. Add Autonomy

For even more advanced tasks, where the assistant needs to reason, plan, or carry out multi-step actions, such as verifying identity, checking inventory, and issuing a refund, you might explore agentic patterns. These systems treat the model as a reasoning engine, wrapped in tools, memory, and logic to act more autonomously. AI agents are promising, but still an area of active exploration in AI engineering.
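The loop at the heart of an agent can be sketched without any model at all. In the example below, `scripted_model` is a stand-in for an LLM call that decides the next action, and the three tools are hypothetical stubs; a real agent would replace them with model calls and with your order and payment systems.

```python
# Hypothetical tools; real ones would call your order and payment systems.
def verify_identity(customer_id):
    return customer_id == "c-42"


def check_inventory(item):
    return {"item": item, "in_stock": True}


def issue_refund(order_id):
    return f"refund issued for {order_id}"


TOOLS = {
    "verify_identity": verify_identity,
    "check_inventory": check_inventory,
    "issue_refund": issue_refund,
}


def scripted_model(memory):
    """Stand-in for an LLM: picks the next action based on the steps done so far."""
    plan = [
        ("verify_identity", "c-42"),
        ("check_inventory", "blue mug"),
        ("issue_refund", "order-1001"),
    ]
    if len(memory) < len(plan):
        return plan[len(memory)]
    return ("finish", None)


def run_agent(model, tools, max_steps=5):
    """Ask the model for an action, run the tool, remember the result, repeat."""
    memory = []  # (tool name, argument, result) for each completed step
    for _ in range(max_steps):
        action, argument = model(memory)
        if action == "finish":
            break
        result = tools[action](argument)
        memory.append((action, argument, result))
        if result is False:  # stop early if a check fails, e.g. identity not verified
            break
    return memory


for step in run_agent(scripted_model, TOOLS):
    print(step)
```

The `max_steps` limit is a deliberate design choice: it keeps a confused model from looping forever, which matters once a real LLM is making the decisions.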

Together, these techniques form the core toolkit of AI engineers. Knowing when and how to use them is key to building reliable, intelligent applications.

“While fancy new frameworks and fine-tuning can be useful for many projects, they shouldn’t be your first course of action.” - Chip Huyen

Challenges

One of the core challenges in AI engineering is evaluation. Many tasks are open-ended, with no single correct answer, making it hard to measure progress or define success. Even summarization is subjective, let alone question answering or agent-based reasoning. Standard benchmarks often fall short, so teams rely on custom metrics, test suites, and real-time user feedback to track performance over time.
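A custom test suite can start very small. The sketch below checks whether each answer contains the phrases it must mention and reports a pass rate. The `fake_assistant` function stands in for a real model call, and the test cases are invented; phrase matching is only one crude metric among many.

```python
def evaluate(answer_fn, cases):
    """Return the fraction of cases whose answer contains every required phrase."""
    passed = 0
    for question, required_phrases in cases:
        answer = answer_fn(question).lower()
        if all(phrase.lower() in answer for phrase in required_phrases):
            passed += 1
    return passed / len(cases)


def fake_assistant(question):
    """Stand-in for a model call; it only knows about returns."""
    if "return" in question.lower():
        return "You can return items within 30 days."
    return "I'm not sure."


# Hypothetical test cases: a question and the phrases a good answer must contain.
CASES = [
    ("What is the return window?", ["30 days"]),
    ("How long does shipping take?", ["business days"]),
]

score = evaluate(fake_assistant, CASES)
print(f"pass rate: {score:.0%}")
```

Running the same suite after every prompt or model change gives you a simple way to notice regressions early.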

Another big challenge is latency and cost. LLMs are both computationally intensive and expensive to run. Even simple queries can take several seconds and require substantial compute resources. Tasks that require multi-step reasoning, such as planning or tool use, make both latency and cost worse. In user-facing applications, this kind of latency breaks the experience. No matter how impressive the output, if it takes too long, people won’t wait. Optimizing for speed while maintaining reliability and quality is a major ongoing challenge.

“Sometimes latency may be even more important than intelligence” - Lex Fridman

Reliability is equally difficult. These models are inherently unpredictable. A small change in input can lead to drastically different output, and the same prompt might not return the same result twice. This non-determinism makes debugging feel more like investigation than engineering. Guardrails and filters can improve behavior, but each layer adds complexity, introduces new failure points, and adds latency.
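One common guardrail is to validate the model's output and retry when it is malformed. Here is a minimal sketch, assuming the model is asked to reply with JSON. The `make_flaky_model` helper simulates an unreliable model with canned replies, and the key names are hypothetical.

```python
import json


def call_with_validation(model_fn, prompt, required_keys, max_attempts=3):
    """Call the model until it returns a JSON object with all required keys."""
    for attempt in range(1, max_attempts + 1):
        raw = model_fn(prompt)
        try:
            data = json.loads(raw)
        except json.JSONDecodeError:
            continue  # not JSON at all, so try again
        if isinstance(data, dict) and all(key in data for key in required_keys):
            return data, attempt
    raise ValueError(f"no valid output after {max_attempts} attempts")


def make_flaky_model(outputs):
    """Stand-in for an LLM that returns the given canned replies in order."""
    replies = iter(outputs)
    return lambda prompt: next(replies)


flaky = make_flaky_model([
    "Sure! Here you go",                                   # not JSON
    '{"intent": "refund"}',                                # missing order_id
    '{"intent": "refund", "order_id": "order-1001"}',      # valid
])
result, attempts = call_with_validation(flaky, "Classify this request", ["intent", "order_id"])
print(result, "after", attempts, "attempts")
```

Note the trade-off described above: every retry is another model call, so this guardrail improves reliability at the price of extra latency and cost.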

Building a prototype with generative AI is fast; turning it into a production-ready system is a different challenge entirely. What I’ve learned through building these systems is to start simple, ship quickly, and add complexity only when there is a clear reason to do so. In AI engineering, that discipline is necessary.

Your Jump-Start Plan

I believe everyone can become an AI engineer, and the best way to learn is by building.

If you've never worked with large language models before, now is the perfect time to start. You don’t need to understand all the internals. Just pick a simple idea and experiment.

Here’s a quick jump-start plan:

1. Brainstorm an idea

Think of a small, valuable use case. A great starting point is a task you do often, or a workflow you could automate.

2. Break it down

Take your idea and divide it into smaller steps. This helps you understand where LLMs can help.

3. Build using an LLM API

Use a foundation model like Gemini to start prototyping. Google’s Gemini API has a generous free tier, so you can get started without spending anything. Just go to their website, create an API key, and start building!

Here is an example of prompting a powerful model using just a few lines of Python code:
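A minimal sketch, using only Python's standard library and the Gemini REST endpoint. The model name `gemini-2.0-flash` and the `GEMINI_API_KEY` environment variable are assumptions made for this example; available models change over time, so check the current API documentation. Google also offers an official Python SDK, which may be more convenient for larger projects.

```python
import json
import os
import urllib.request

API_URL = "https://generativelanguage.googleapis.com/v1beta/models/{model}:generateContent"


def build_request(prompt, api_key, model="gemini-2.0-flash"):
    """Create the HTTP request; the model name is an assumption and may change."""
    body = json.dumps({"contents": [{"parts": [{"text": prompt}]}]}).encode("utf-8")
    return urllib.request.Request(
        API_URL.format(model=model),
        data=body,
        headers={"Content-Type": "application/json", "x-goog-api-key": api_key},
        method="POST",
    )


def extract_text(response_json):
    """Join the text parts of the first candidate in the response."""
    parts = response_json["candidates"][0]["content"]["parts"]
    return "".join(part.get("text", "") for part in parts)


def ask(prompt, api_key):
    """Send the prompt to the API and return the model's text reply."""
    with urllib.request.urlopen(build_request(prompt, api_key), timeout=30) as response:
        return extract_text(json.load(response))


if __name__ == "__main__":
    key = os.environ.get("GEMINI_API_KEY")  # assumed variable name for your API key
    if key:
        print(ask("Explain AI engineering in one sentence.", key))
    else:
        print("Set GEMINI_API_KEY to run this example.")
```

The request building and response parsing are kept in separate functions so you can test them without making a network call.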

To help you get started, I’ve created a simple example that walks you through building an AI Learning Coach chatbot. It’s a real-world use case that demonstrates how to integrate an LLM into your application through an API and use basic techniques like prompt engineering and routing.

Don’t aim for perfection. Start exploring, building, and learning.