Large Language Models Explained

Learn how LLMs think (and how to think about them)

Intro

Large Language Models (LLMs) are arguably the most powerful AI models we have today. They power applications like ChatGPT, and can write poems, answer questions, draft legal documents, and even generate code. With billions of parameters (loosely, the connections between artificial “neurons”) trained on a large share of the public internet, LLMs can understand and generate human language.

What’s even more impressive: they generalize across a wide range of tasks, often without needing any additional training. That’s why, for many applications, you no longer need to build your own AI model from scratch - you can just plug into one. This shift in how we build with AI is at the heart of AI Engineering, which I introduced in the first issue of this series.

Today, almost anyone can use ChatGPT to learn faster, get work done, or experiment creatively. But while LLMs are everywhere - and CEOs can’t stop talking about them - very few people actually understand how they work under the hood.

If you’re an engineer building with these models, this understanding isn’t optional. It’s what lets you use LLMs effectively, debug weird outputs, and design systems that go beyond prompting.

This is essential not only for developers but for everyday users, so they can better understand the flaws and use these models more efficiently.

💡 In This Issue

In this issue, we’ll build a strong mental model for how Large Language Models actually work. You’ll learn how these models evolved, what they’re really doing when they generate text, and how to work with them effectively.

While we’ll touch on some technical aspects, the focus here is clarity — not complexity. We’ll leave the deep dives (like how attention works) for future issues. Today is all about getting the right mental model.

An LLM Is “Just” a Next-Word Predictor

Ever typed a sentence and watched your phone suggest the next word? Now imagine that - scaled to billions of parameters and trained on most of the internet.

That’s a large language model.

LLMs create responses word by word based on user input. They are basically predicting the next word, but in ways that appear intelligent to humans.

But language modeling isn’t new.

The task of predicting the next word, or sequence of words, has evolved over decades. Early rule-based systems were rigid and limited. Statistical n-gram models introduced probabilities but struggled with longer context. Finally, neural networks like RNNs and LSTMs in the 2010s improved performance using deep learning, but still faced challenges with long-range dependencies.

Then came a breakthrough.

Transformers Changed Everything

In 2017, Google researchers proposed a new neural network architecture, the Transformer, in the now-famous paper “Attention Is All You Need”.

It was built around the self-attention mechanism, which allows models to understand language much better, especially over longer sequences.

This also made training vastly more parallelizable - a perfect match for modern compute infrastructure.

Transformers became the foundation of models like BERT, GPT, and LLaMA. Today, nearly every state-of-the-art NLP model uses this architecture.

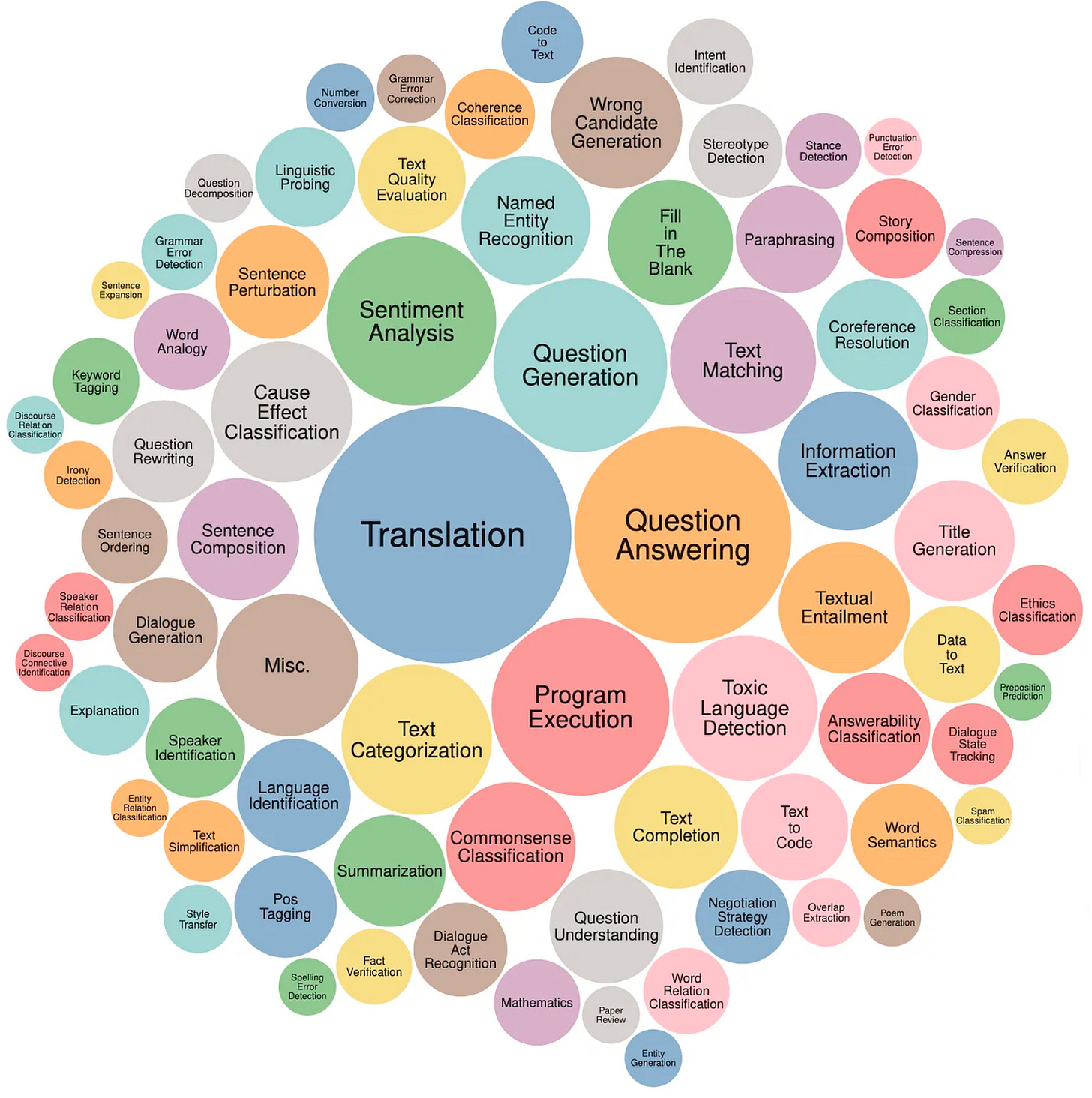

Transformers can be adapted to different tasks:

Encoders (e.g. BERT) for classification and entity recognition.

Decoders (e.g. GPT) for text generation.

Encoder-decoder models (e.g. T5) for translation, summarization, and question answering. Though today, many of these tasks are handled by decoder-only models.

In this issue, we focus on decoder-only models like the ones behind ChatGPT: the ones that generate language, word by word, to simulate conversation, write code, solve problems, and more.

Emergent Capabilities

Even though LLMs are trained just to predict the next word, they can end up doing things that look surprisingly smart.

🧠 Mimicked Reasoning

By generating text one word at a time, they can follow step-by-step reasoning, like solving a math problem or explaining a concept. This “thinking out loud” often leads to better answers, simply by writing down each small step.

🛠 Tool Use

The same word-by-word generation also enables tool use. For example, if connected to a calculator or a search engine, a model can write something like calculate(2 + 2) or search("weather in Paris") and the system will recognize that as a tool call. The model doesn't need to know what a calculator is; it just learns to write the right words to get the job done.
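To make the idea concrete, here is a minimal sketch of how a surrounding system might spot tool calls in generated text and run them. The tool names `calculate` and `search`, the regular expression, and the registry are all illustrative assumptions; real systems typically use structured formats rather than this exact syntax.

```python
import re

# Hypothetical tools: names and behavior are illustrative only.
def calculate(expression):
    # Only handles "a + b" sums, to keep the sketch safe (no eval).
    left, right = expression.split("+")
    return str(int(left) + int(right))

def search(query):
    # Stand-in for a real search engine call.
    return f"(search results for {query!r})"

TOOLS = {"calculate": calculate, "search": search}

# Matches text such as calculate(2 + 2) or search("weather in Paris").
TOOL_CALL = re.compile(r"(\w+)\((.*?)\)")

def run_tool_calls(model_output):
    """Replace every recognized tool call in the text with the tool's result."""
    def replace(match):
        name = match.group(1)
        argument = match.group(2).strip().strip('"')
        if name not in TOOLS:
            return match.group(0)  # not a known tool, leave the text alone
        return TOOLS[name](argument)
    return TOOL_CALL.sub(replace, model_output)

print(run_tool_calls("The answer is calculate(2 + 2)."))  # The answer is 4.
```

The model itself only ever produces text; it is the wrapper code that turns that text into an action and feeds the result back.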

🤖 Agentic Behavior

With the right setup, LLMs can also carry out multi-step tasks—deciding what to do next, using tools, checking results, and continuing—all just by continuing a text. This kind of structured problem-solving is called an agentic workflow, and it’s powered entirely by next-word prediction.

So, these "stochastic parrots" display surprisingly sophisticated behaviors. Their simple objective, when scaled and trained on diverse data, gives rise to previously unseen capabilities.

Training

Training a neural network involves adjusting its internal parameters, so that its behavior begins to mirror human-like understanding. By showing it tons of examples of input-output pairs, the model starts to uncover patterns in language and uses these to make smart predictions on new, unseen text. For LLMs, this learning happens in two major stages: pre-training and post-training.

Note: LLMs do not operate on raw text. Instead, they operate on tokens. You can think of them as words (e.g. “learn”) or subwords (e.g. “ed”, “ing”).

Pre-training

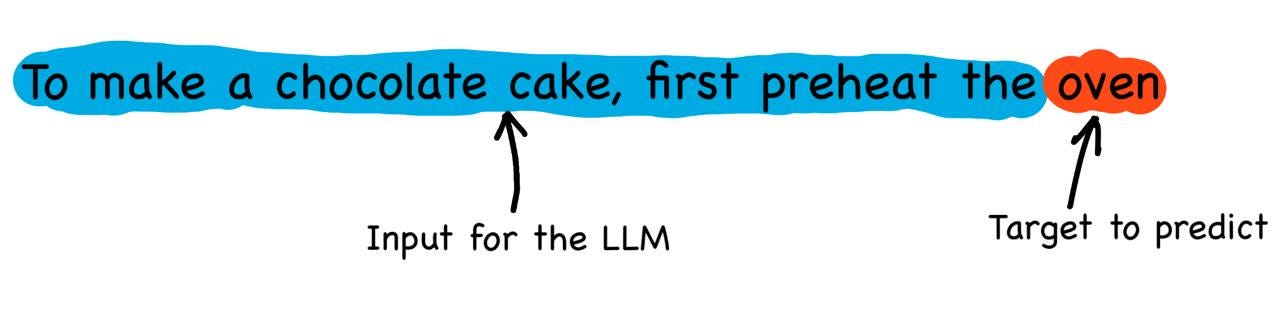

The first and most computationally intensive phase is called pre-training. Here, the model is exposed to vast amounts of raw text from books, articles, websites, forums, and other public sources. It learns by predicting the next token in a sentence, like completing:

“To make a chocolate cake, first preheat the ...” → “oven”.

This simple game of next-token prediction turns out to be surprisingly powerful. It enables the model to learn grammar, facts about the world, reasoning patterns, and even some basic common sense, all without explicit human supervision. This is why it’s called self-supervised learning: the supervision signal (what the "correct" answer is) comes from the data itself.

Data Collection

LLMs are trained on enormous amounts of text, far more than any human could absorb. Meta’s LLaMA 3, for example, was trained on 15 trillion tokens, orders of magnitude more than a person could read in a lifetime.

They learn not through deep experience, but massive breadth.

To reach this scale, developers crawl the web and license large datasets. Common sources include Common Crawl, a project that regularly crawls billions of web pages. The data then passes through filters to improve quality and reduce harm. One openly available filtered dataset is FineWeb, with roughly 15 trillion tokens.

The data collection process remains controversial: many documents are scraped without permission, raising legal and ethical concerns.

Objective: Autoregressive Language Modeling

Most modern LLMs are trained as autoregressive language models. That means they take a sequence of tokens (e.g., words or subwords) and learn to predict the next token, one step at a time.

The training dataset is split into chunks of varying size, and these chunks are used to train the model. At each step, the model sees all the previous tokens and produces a probability distribution over which token should come next. It is trained only to predict what usually comes next in human language, not to understand it explicitly. But as it ingests more data, those patterns begin to encode complex ideas and knowledge structures.
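As a rough sketch of what “predict the next token at every step” means in practice, the snippet below turns one chunk into training pairs and scores a single prediction. Treating whole words as tokens, the predicted probabilities, and the use of cross-entropy as the loss are assumptions made for illustration (cross-entropy is the usual choice, but the text above does not name it).

```python
import math

# One training chunk, with whole words standing in for tokens.
tokens = ["To", "make", "a", "chocolate", "cake", ",", "first", "preheat", "the", "oven"]

# Every prefix of the chunk is an input; the token right after it is the target.
pairs = [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]

def cross_entropy(predicted_probs, target):
    """Loss for one step: small when the true next token got a high probability."""
    return -math.log(predicted_probs[target])

# Hypothetical model output for the last prefix ("... first preheat the").
predicted = {"oven": 0.90, "microwave": 0.05, "pan": 0.03, "other": 0.02}

context, target = pairs[-1]
print(" ".join(context), "->", target)
print(round(cross_entropy(predicted, target), 3))  # 0.105
```

Training nudges the parameters so that this loss, averaged over an enormous number of such pairs, goes down.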

Infrastructure & Scaling Laws

Training these models requires enormous compute infrastructure. Clusters of specialized GPUs are used to train a model in parallel over weeks or months.

Why train such large models? Because scaling laws show that performance continues to improve as we scale up the size of the model and the amount of training data. This in turn requires more compute.

Post-training

At the end of pre-training, we have a base model: a powerful, general-purpose text generator that’s read a large fraction of the internet. But it’s not yet an assistant. If you ask it:

“What’s your name?”

It might respond with:

“What’s your surname?”

because that phrase often follows in web forms the model saw during training.

Even worse, it may reproduce offensive or harmful language seen during training. That’s why base models are typically not exposed directly to users.

To make the model more helpful and harmless, we run it through post-training.

Post-training turns raw linguistic intelligence into trustworthy interaction.

Pre-training unlocks capability. Alignment unlocks usability.

1. Instruction Fine-Tuning

The first step in post-training is supervised fine-tuning (SFT), also called instruction tuning. Here, the model is shown curated examples of how it should behave in assistant-like conversations:

User: What’s your name?

Assistant: My name is ChatGPT, a language model developed by OpenAI.

This includes both synthetic conversations and examples written manually by human experts. The learning objective is the same as in pre-training (predict the next token), but now the training examples are dialog turns, not internet text.

SFT teaches the model how to:

Follow instructions

Be polite and informative

Refuse unsafe or inappropriate requests

It’s how the model begins to simulate helpful behavior.

2. Reinforcement Learning / Preference Optimization

Instruction fine-tuning gets you a competent assistant, but it still imitates human-written answers without deeper judgment. To take it further, we apply reinforcement learning (RL).

There are two major goals here. The first, preference alignment, teaches the model to produce responses that humans prefer. The second, reasoning emergence, encourages the model to discover and use multi-step reasoning strategies.

Reinforcement Learning from Human Feedback (RLHF)

The core of RLHF is to generate several candidate answers to each prompt and have humans rank them, so the best answer gets the highest score. These rankings are used to train a reward model that estimates how much a human would prefer each response. Next, the language model (policy) is fine-tuned using reinforcement learning (often PPO) to maximize the reward signals. This process allows the model to explore new outputs that go beyond simply imitating training examples, learning to produce responses that align more closely with human preferences.
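The reward model step can be illustrated with a pairwise preference loss. This is a minimal sketch assuming a Bradley-Terry style objective, which is a common choice but is not specified in the text above; the reward values are made up.

```python
import math

def preference_loss(reward_chosen, reward_rejected):
    """Low when the human-preferred answer receives the higher reward."""
    margin = reward_chosen - reward_rejected
    return -math.log(1.0 / (1.0 + math.exp(-margin)))

# Reward model agrees with the human ranking: small loss.
print(round(preference_loss(2.0, 0.0), 3))  # 0.127
# Reward model disagrees with the human ranking: large loss.
print(round(preference_loss(0.0, 2.0), 3))  # 2.127
```

Minimizing this loss over many ranked pairs pushes the reward model to score answers the way human raters would, and that score then serves as the signal the policy is trained to maximize.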

Note: Some preference alignment methods, like DPO and SimPO, were inspired by RLHF but do not use reinforcement learning. They simplify the process and have been shown to perform as well as or better than RLHF in many tasks.

RL Unlocks Reasoning

Perhaps the most exciting result of post-training is that reasoning emerges.

RL-tuned models (e.g., OpenAI o1, DeepSeek-R1) don’t just answer questions; they think through them:

Break down problems into steps

Double-check answers

Try alternative approaches

These reasoning patterns weren’t necessarily present in the training data. The models discover them through these RL methods.

What Happens Inside the Model? (4 Core Steps)

Now that the model has been trained, let’s explore how an LLM like ChatGPT works under the hood when you interact with it.

Let’s say you have a torn recipe that looks like:

“To make a chocolate cake, first preheat the…”

You would easily guess that “oven” is the next word. Let’s explore how an LLM arrives at this prediction in four key steps.

Tokenization: Translating Words Into Numbers

Tokens are the true “atoms” of LLMs.

Everything an LLM does, whether it’s generating fluent text or hallucinating facts, emerges from how it processes tokens. In fact, poor tokenization can hurt performance as much as a smaller parameter count.

But what exactly are tokens, and why do we need them?

To work with text, models need to convert it into numbers. The most naïve approach is to treat each character as a token and assign it a number. But this leads to two big problems:

Sequences become extremely long, which slows everything down—training, inference, memory use.

Patterns become harder to learn. At the character level, meaningful structures are broken into tiny pieces. That makes it much harder for the model to learn how language actually works.

Think about it: when you write a sentence, you don’t think one letter at a time—you think in words or phrases.

The next idea might be: just assign an ID to every word. That seems more natural, but it creates new issues:

Rare words are a problem. If a word barely appears in the training data, the model won’t learn much about it.

Misspellings, slang, and new words break the system. With a pure word-level approach, the model has no way to handle something it hasn’t seen before.
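The trade-off between the two naive approaches can be seen in a few lines. This is a toy sketch: the four-word vocabulary and the `UNKNOWN` placeholder ID are assumptions for illustration.

```python
sentence = "first preheat the oven"

# Character-level: tiny vocabulary, but long sequences.
char_ids = [ord(ch) for ch in sentence]

# Word-level: short sequences, but anything outside the vocabulary is lost.
word_vocab = {"first": 0, "preheat": 1, "the": 2, "oven": 3}
UNKNOWN = -1  # hypothetical placeholder ID for unseen words

def word_ids(text):
    return [word_vocab.get(word, UNKNOWN) for word in text.split()]

print(len(char_ids))                        # 22
print(word_ids(sentence))                   # [0, 1, 2, 3]
print(word_ids("first preheet the oven"))   # [0, -1, 2, 3]
```

Four words became 22 character tokens, while a single typo (“preheet”) left the word-level model with no usable information about that word.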

The Subword Solution

So we split the difference. Instead of characters or full words, tokenizers break text into subword units—smaller chunks that balance vocabulary size with expressive power.

Take the word “preheat.” It splits into two tokens: "pre" and "heat". This allows the model to:

Learn meanings more efficiently by sharing representations across related words (e.g., “heat,” “heating,” “preheat”).

Understand rare or unseen words by recombining known pieces.

Asking an LLM to count how many letters are in a word often fails, because it never sees letters. It sees tokens, which might represent whole words or subwords.

Tokenizers are vocabularies that translate text into numbers (and back). We will explore how they are created in separate issues. For now, it’s important to understand how text is converted to tokens during this first step.
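The subword idea can be sketched with a greedy longest-match splitter. This is a simplification: the tiny vocabulary below is hand-written for illustration, and production tokenizers learn their pieces from data with algorithms such as byte-pair encoding rather than matching like this.

```python
# Hypothetical toy vocabulary; real tokenizers hold tens of thousands of pieces.
VOCAB = {"pre": 0, "heat": 1, "ing": 2, "ed": 3, "re": 4}

def tokenize(word):
    """Split a word into the longest known pieces, left to right."""
    pieces = []
    start = 0
    while start < len(word):
        # Try the longest candidate first, then shrink it.
        for end in range(len(word), start, -1):
            if word[start:end] in VOCAB:
                pieces.append(word[start:end])
                start = end
                break
        else:
            # No known piece starts here: fall back to a single character.
            pieces.append(word[start])
            start += 1
    return pieces

print(tokenize("preheat"))     # ['pre', 'heat']
print(tokenize("heating"))     # ['heat', 'ing']
print(tokenize("reheated"))    # ['re', 'heat', 'ed']
```

Note that “reheated” is handled even though it never appears in the vocabulary as a whole word, and that the model downstream would see three pieces, not eight letters.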

You can play with tokenizers and explore how different models “see” the input text using tiktokenizer.

Tokenization isn’t just a preprocessing detail - it shapes how the entire model understands language.

Embedding: Understanding Words’ Meaning

After text is tokenized, the first step in an LLM is to convert each token into a dense vector known as an embedding. These vectors live in a high-dimensional space (often with hundreds or even thousands of dimensions) where tokens with related meanings are positioned close together. For example, “cake” near “pastry” or “chocolate” near “vanilla.” This mapping is done through a learned embedding table, which assigns each token an initial vector based on patterns seen during pre-training. At this stage, embeddings are static: they don’t yet account for context.

Still, even these initial embeddings encode rich semantic structure. They allow the model to compare meanings, detect similarities, and perform simple conceptual arithmetic, like subtracting “man” from “king” and adding “woman” to get something close to “queen.” Embeddings also play a central role in external tasks like retrieval or search, where specialized embedding models are trained to produce vector representations of entire passages or queries.
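Here is a small sketch of an embedding table and the “king, man, woman, queen” arithmetic. The three-dimensional vectors are hand-picked for illustration (real embeddings are learned and have far more dimensions), and cosine similarity is assumed as the measure of closeness.

```python
import math

# Hypothetical embedding table; dimensions loosely mean (royalty, masculine, food).
EMBEDDINGS = {
    "king":   [0.9, 0.9, 0.0],
    "queen":  [0.9, 0.1, 0.0],
    "man":    [0.1, 0.9, 0.0],
    "woman":  [0.1, 0.1, 0.0],
    "cake":   [0.0, 0.1, 0.9],
    "pastry": [0.1, 0.0, 0.8],
}

def cosine(a, b):
    """Similarity of direction: close to 1.0 means closely related."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def nearest(vector, exclude=()):
    candidates = [word for word in EMBEDDINGS if word not in exclude]
    return max(candidates, key=lambda word: cosine(vector, EMBEDDINGS[word]))

# king - man + woman
target = [k - m + w for k, m, w in
          zip(EMBEDDINGS["king"], EMBEDDINGS["man"], EMBEDDINGS["woman"])]

print(nearest(target, exclude=("king", "man", "woman")))  # queen
print(cosine(EMBEDDINGS["cake"], EMBEDDINGS["pastry"]) >
      cosine(EMBEDDINGS["cake"], EMBEDDINGS["king"]))      # True
```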

Attention: Understanding the Context

Once tokens are embedded, the model needs more than just their meanings—it also needs to understand their order. Unlike humans, it has no built-in sense of sequence, so positional information is added to the token vectors. This helps the model distinguish between phrases like “first preheat the” and “preheat the first.”
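One way to add positional information is the sinusoidal encoding from the original Transformer paper, sketched below. Treat it as one possible scheme, not necessarily what any given model uses; many current LLMs rely on learned or rotary position methods instead. The four-dimensional token vector is made up.

```python
import math

def positional_encoding(position, dim):
    """Sinusoidal position vector: sine on even indices, cosine on odd ones."""
    vector = []
    for i in range(dim):
        # Each pair of dimensions (2k, 2k + 1) shares one frequency.
        angle = position / (10000 ** ((i - i % 2) / dim))
        vector.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
    return vector

def add_position(token_vector, position):
    encoding = positional_encoding(position, len(token_vector))
    return [t + p for t, p in zip(token_vector, encoding)]

first = [0.2, 0.1, 0.7, 0.3]  # hypothetical embedding of "first"

# The same token gets a different vector depending on where it appears.
print(add_position(first, 0))
print(add_position(first, 2))
```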

With position and meaning combined, the model begins its core task: connecting the dots through attention. Attention layers allow the model to look at the other words in the sentence (in a decoder-only model, the ones that came before) and decide which ones matter most.

Here’s how: each word creates a query, and compares it to keys from all the other words to see which ones are most relevant. If a match is strong (like between the query “cake” and the key “chocolate”) the model pays more attention to that connection. The actual content that gets passed along is stored in values, which are blended based on how strong each match is.

So when it encounters “chocolate cake,” attention strengthens the link between the two, refining the meaning of “cake” into something more specific.
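The query, key, and value mechanics can be sketched as scaled dot-product attention for a single query. All vectors below are hand-picked assumptions; in a real model, queries, keys, and values come from learned projections of the token vectors, and many attention heads run in parallel.

```python
import math

def softmax(scores):
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(query, keys, values):
    """Return the attention weights and the blended value vector for one query."""
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    blended = [sum(w * value[i] for w, value in zip(weights, values))
               for i in range(len(values[0]))]
    return weights, blended

words = ["a", "chocolate", "cake"]
keys = [[0.0, 1.0], [2.0, 0.0], [0.5, 0.5]]    # hypothetical keys
values = [[0.1, 0.0], [0.0, 1.0], [1.0, 0.0]]  # hypothetical values
cake_query = [1.0, 0.0]                         # hypothetical query for "cake"

weights, blended = attend(cake_query, keys, values)
for word, weight in zip(words, weights):
    print(word, round(weight, 2))
```

With these numbers, “chocolate” receives the largest weight, so its value contributes most to the updated representation of “cake”.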

This process repeats across many layers. Each one applies attention to capture relationships, followed by a feed-forward network that transforms the results. With every pass, the model deepens its understanding by layering new patterns onto old ones.

By the final layer, “cake” isn’t just a baked good, it’s a chocolate cake being prepared in an oven. The meaning has evolved through a sequence of updates shaped by the entire sentence.

This ability to build meaning through understanding connections is what gives LLMs their power.

Sampling: Choosing the Next Word

Now that the model understands we’re talking about a chocolate cake, it’s ready to predict what comes next. After passing through all layers, the final representation is used to compute a score (logit) for every token in the vocabulary. These scores are turned into probabilities using a softmax function.

For example:

Oven – 90%

Microwave – 5%

Pan – 3%

Other – 2%

Here, “oven” clearly stands out as the most likely next word. Instead of always picking the top one, we sample. It’s like rolling weighted dice, where higher-probability tokens are more likely to be chosen.

This sampling step is what gives LLMs their creativity and diversity. Without it, outputs would be repetitive and dull. Every recipe would look the same.
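The softmax and the weighted dice roll can be sketched as follows. The logits are hypothetical, chosen so that softmax reproduces the percentages in the example above, and temperature is included as one common sampling knob (an assumption here, since the text does not name specific strategies).

```python
import math
import random

def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; lower temperature sharpens them."""
    scaled = {token: value / temperature for token, value in logits.items()}
    top = max(scaled.values())
    exps = {token: math.exp(value - top) for token, value in scaled.items()}
    total = sum(exps.values())
    return {token: e / total for token, e in exps.items()}

def sample(probs, rng):
    """Weighted dice roll over the candidate tokens."""
    return rng.choices(list(probs), weights=list(probs.values()), k=1)[0]

# Hypothetical logits that yield 90% / 5% / 3% / 2% at temperature 1.0.
logits = {"oven": math.log(0.90), "microwave": math.log(0.05),
          "pan": math.log(0.03), "other": math.log(0.02)}

probs = softmax(logits)
print({token: round(p, 2) for token, p in probs.items()})

rng = random.Random(0)  # seeded only to make the sketch repeatable
print([sample(probs, rng) for _ in range(5)])

# A lower temperature makes "oven" even more dominant.
print(round(softmax(logits, temperature=0.5)["oven"], 3))
```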

There are different sampling strategies that allow you to steer the model’s output toward different goals: more creative, more predictable, more diverse, or more structured. There is another issue that explains sampling in LLMs in detail.

This entire process of understanding, prediction, and sampling continues until the recipe is complete or generation hits a stopping point, such as reaching the maximum token limit or generating a special end-of-sequence token.
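The generation loop and its two stopping conditions can be sketched like this. The lookup table stands in for the model and is purely hypothetical: it only looks at the last token, whereas a real LLM conditions on everything in the context. The `<eos>` marker name is also an assumption.

```python
import random

END = "<eos>"  # hypothetical end-of-sequence token

# Toy stand-in for the model: last token -> next-token probabilities.
NEXT_TOKEN_TABLE = {
    "the": {"oven": 0.9, "microwave": 0.1},
    "oven": {".": 1.0},
    "microwave": {".": 1.0},
    ".": {END: 1.0},
}

def next_token_probs(tokens):
    return NEXT_TOKEN_TABLE.get(tokens[-1], {END: 1.0})

def generate(prompt_tokens, max_new_tokens, rng):
    tokens = list(prompt_tokens)
    for _ in range(max_new_tokens):       # stop 1: token limit reached
        probs = next_token_probs(tokens)
        token = rng.choices(list(probs), weights=list(probs.values()), k=1)[0]
        if token == END:                  # stop 2: end-of-sequence token
            break
        tokens.append(token)              # the new token joins the context
    return tokens

print(generate(["first", "preheat", "the"], max_new_tokens=10, rng=random.Random(0)))
```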

Think of the whole process like a super-advanced version of completing a sentence, where each word choice is informed by understanding the meaning of all previous words and their relationships to each other. The model does this by converting words to numbers, understanding their basic meanings, analyzing their relationships, making informed predictions, and building the response one word at a time.

Limitations & Mitigations

Despite their impressive capabilities, LLMs are not magic. A useful way to think about their limits is via the “Swiss cheese” model, formulated by Andrej Karpathy:

LLMs are solid and capable overall, but full of unpredictable holes.

You can get fluent, intelligent output one moment and nonsense the next. Understanding these limitations helps avoid mistakes and gives you ways to prompt more effectively.

Hallucinations and Knowledge Cutoff

LLMs like ChatGPT are trained to be helpful assistants that always try to answer your questions. That’s why, even when they don’t know something, they might still respond politely and confidently, and sometimes incorrectly. This is called hallucination.

Note: Common knowledge is reinforced by frequent patterns in the training data, but rare or obscure facts are less reliably encoded and more prone to errors.

Mitigation

Give the model enough context, or

Use search tools if possible.

For mission-critical use, validate output with other systems.

Math and Spelling

LLMs struggle with precise tasks like counting or character indexing because:

They operate on tokens, not characters.

For example, “berry” might be a single token, so the model doesn’t "see" individual letters and thus doesn’t know how many “r”s are in “strawberry”.

Arithmetic is not performed symbolically but learned statistically.

As we've discussed, the model works by predicting the most likely next token based on patterns in its training data. That’s why, for complex or uncommon equations, it may generate answers that sound plausible, but are actually incorrect.

Mitigation

Let the model use a tool, such as a code interpreter.

In that way, instead of performing calculations by predicting next tokens, it will write a piece of code that will do this programmatically and then give you an answer.
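For instance, the kind of code a model might write for these tasks is trivial once it runs as a program instead of being predicted token by token. The function name is illustrative.

```python
def count_letter(word, letter):
    """Count characters directly, which a program can do exactly."""
    return word.count(letter)

print(count_letter("strawberry", "r"))  # 3

# Exact integer arithmetic, computed rather than guessed.
print(123456789 * 987654321)
```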

Limited Context Window

LLMs have a limited memory: they can only process a certain number of tokens at a time. This limit is known as the context window. It is the maximum number of tokens the model can “see” and use to generate a response.

For example, GPT-4 Turbo can handle up to 128,000 tokens, which covers hundreds of pages of text. But anything beyond that is invisible to the model. It doesn’t remember earlier parts of a conversation unless they fall within the current window.

Even within the token limit, performance can degrade as the input gets longer. Models tend to focus more on the beginning and the most recent tokens, and may overlook important details in the middle. So while longer context windows are useful, they come with trade-offs in accuracy, speed, and cost.

Mitigation

Restart conversations when they get too long.

Repeat or summarize key information periodically.

Put important information at the beginning and near the end.
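If you manage the conversation history yourself, these tips can be sketched as a trimming step. This is a minimal sketch under loud assumptions: `count_tokens` counts whitespace-separated words as a crude stand-in for a real tokenizer, and keeping the first message plus the most recent ones is just one possible policy.

```python
def count_tokens(text):
    # Crude stand-in: a real system would use the model's tokenizer.
    return len(text.split())

def fit_to_window(messages, max_tokens):
    """Keep the first message and as many of the most recent ones as fit."""
    if not messages:
        return []
    first, rest = messages[0], messages[1:]
    # The first message is always kept, even if it alone exceeds the budget.
    budget = max_tokens - count_tokens(first)
    kept = []
    for message in reversed(rest):  # walk from newest to oldest
        cost = count_tokens(message)
        if cost > budget:
            break
        kept.append(message)
        budget -= cost
    return [first] + kept[::-1]     # restore chronological order

history = ["You are a travel writer.", "one two three", "four five", "six"]
print(fit_to_window(history, max_tokens=8))
# ['You are a travel writer.', 'four five', 'six']
```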

Prompting Tips

Keep in mind the golden rule of working with LLMs:

Better input → better output.

LLMs don’t read your mind. They complete patterns. What you prompt is what you’ll receive.

A clear prompt often follows a simple structure:

Set the persona to give the model a role or mindset

Provide context with any background or constraints the model should know

Specify the task by clearly stating what you want it to do

Declare the format so it knows how the output should look

Prompt example:

You are a travel writer.

Here’s some background: I have a 10-hour layover in Paris.

List 5 must-see landmarks.

Format: bullet points with 1-sentence descriptions.

To give the model a better understanding of the task and/or output format, you can provide examples. This is also called few-shot prompting.

Prompt example:

Please convert HTML to markdown.

Here are some examples:

Input: <h1>Header</h1>

Output: # Header

Convert this: <b>Bold Text</b>

If you are asking the model to solve a complex task that requires logical reasoning, encourage it to think step by step. This technique is called Chain-of-Thought.
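If you build prompts in code, the persona, context, task, and format structure, plus optional few-shot examples and a step-by-step nudge, can be assembled with a small helper. The function name, parameters, and the exact wording of the step-by-step line are illustrative assumptions, not a standard API.

```python
def build_prompt(persona, context, task, output_format,
                 examples=(), think_step_by_step=False):
    """Assemble a prompt from the four parts, with optional few-shot examples."""
    lines = [persona, context, task]
    for example_input, example_output in examples:
        lines.append(f"Input: {example_input}")
        lines.append(f"Output: {example_output}")
    lines.append(f"Format: {output_format}")
    if think_step_by_step:
        # Chain-of-Thought nudge; the wording is only a suggestion.
        lines.append("Think step by step before giving the final answer.")
    return "\n".join(lines)

prompt = build_prompt(
    persona="You are a travel writer.",
    context="I have a 10-hour layover in Paris.",
    task="List 5 must-see landmarks.",
    output_format="bullet points with 1-sentence descriptions.",
)
print(prompt)
```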