Sampling in Large Language Models

or How LLMs get creative

Recently, I had a technical interview with one of the few companies that build Large Language Models (LLMs). During the interview, I was asked about sampling in LLMs: the strategies that exist, why they are needed, how they work, and even to implement some of them. Thanks to the knowledge I’ve built over my career, I handled the interview confidently. Today, I’ll share everything you need to know about sampling. Whether you’re an AI engineer or an enthusiast, this overview will give you the fundamentals needed to better understand and work with these models.

You can expect to get through this issue in about 6 minutes.

What is sampling and why do we need it?

Any artificial neural network, including an LLM, is essentially one very large mathematical function. Its output is computed from the input and a fixed set of weight matrices. In other words, given the same input, an LLM produces the same output every time.

This behaviour is fine for most applications. For example, when we want a model to predict whether an email is spam, we expect the same prediction for the same email every time. But that’s not the case for LLMs, where we often want the model to be more “creative” and generate a different response each time we say “Hello”.

So how do LLMs generate a different answer each time?

Sampling Strategies

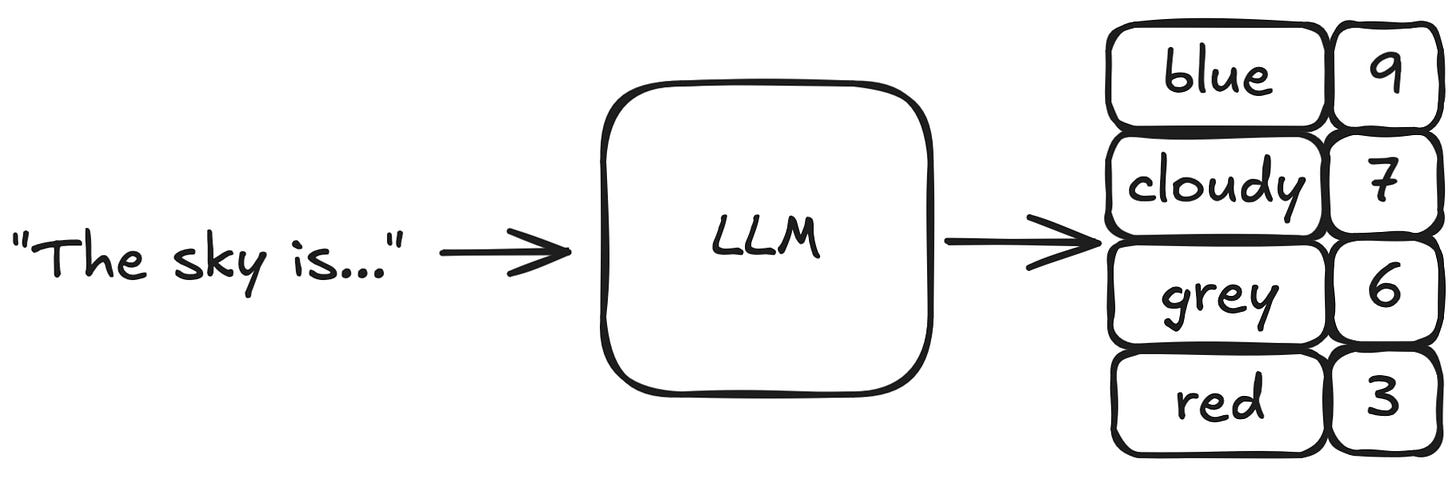

To answer this question, we first need to understand how these models work. I have a whole issue explaining how LLMs work. The key thing to understand is that at each generation step, the LLM’s final layer assigns a score to every word (token) in its vocabulary. These scores reflect how likely the model thinks each word is to come next.

At each step, an LLM predicts a logit (number) for every possible next token.

The simplest strategy is to choose the token with the highest logit. This is called “greedy decoding” and it produces the same response every time you say “Hello”.
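As a minimal sketch of greedy decoding, the snippet below picks the highest-scoring token from a small set of logits. The tokens and their values are made up for illustration and do not come from a real model.

```python
# Hypothetical logits for the first token of a reply to "Hello" (invented values).
logits = {"Hi": 5.1, "Hello": 4.8, "Hey": 3.2, "Greetings": 1.0}

def greedy_pick(logits: dict) -> str:
    """Return the token with the highest logit."""
    return max(logits, key=logits.get)

# No randomness is involved, so repeated calls always agree.
print([greedy_pick(logits) for _ in range(3)])  # ['Hi', 'Hi', 'Hi']
```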

Instead of always picking the top token, some sampling strategies also consider other options. For example, for the sentence “The sky is…” the most likely word is “blue”, but the model might also choose “cloudy”, “gray”, or even “red”. This allows LLMs to respond in a more creative and engaging way.

Now that we understand the basics of sampling, let’s look at the strategies most commonly used and how they work.

Converting logits to probabilities

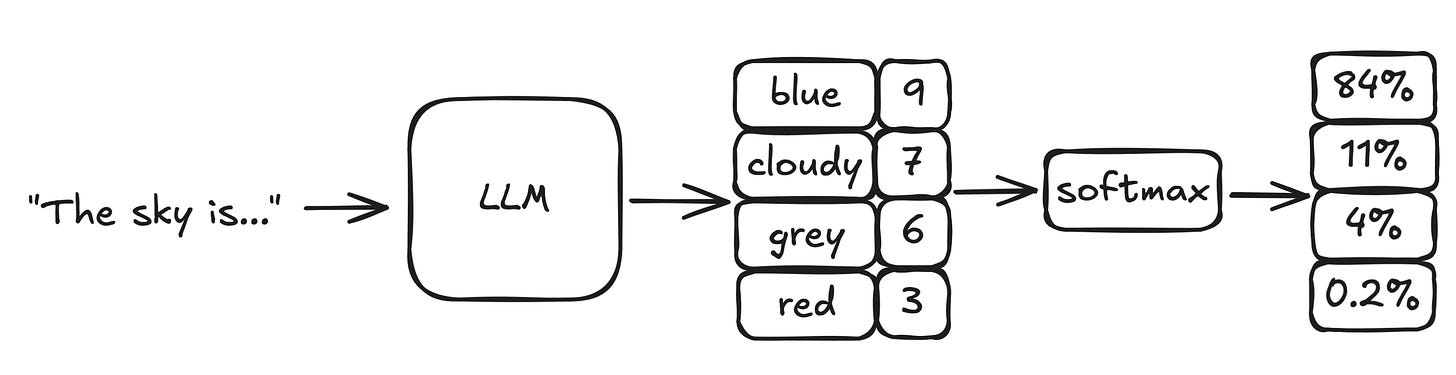

To choose tokens based on probabilities, we first need to convert the logits (raw scores) into probabilities that sum to 1.

The key mathematical formula to make sampling strategies work is softmax.

The softmax equation looks like this:

$$\mathrm{softmax}(z_i) = \frac{e^{z_i}}{\sum_{j=1}^{K} e^{z_j}}$$

where z_i is the logit for token i, and K is the total number of tokens in the vocabulary. This function turns the set of logits into a probability distribution: all values are between 0 and 1, and they add up to 1.

To better understand this, let’s say the model needs to complete the sentence:

The sky is…

It now has the option to choose one of 4 words: blue, cloudy, gray, or red. Each of these words has an assigned logit: 9, 7, 6, and 3, respectively. Softmax converts these logits into a set of probabilities:

- blue: 84.2%
- cloudy: 11.4%
- gray: 4.2%
- red: 0.2%

Now, instead of choosing the word “blue” every time, we can choose one of these words according to the probability distribution. If we randomly sampled from this distribution 100 times, we’d expect to get “blue” about 84 times, “cloudy” about 11 times, etc.
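The example above can be reproduced in a few lines of plain Python. This is a sketch rather than how any particular library does it: the `softmax` helper is written by hand, and the random generator is seeded only so the demo is repeatable.

```python
import math
import random

def softmax(logits):
    """Convert raw logits into probabilities that sum to 1."""
    m = max(logits)  # subtracting the max avoids overflow and leaves the result unchanged
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

tokens = ["blue", "cloudy", "gray", "red"]
logits = [9.0, 7.0, 6.0, 3.0]
probs = softmax(logits)

for token, p in zip(tokens, probs):
    print(f"{token}: {p:.1%}")  # blue: 84.2%, cloudy: 11.4%, gray: 4.2%, red: 0.2%

# Draw 100 tokens according to the distribution and count each one.
rng = random.Random(0)
draws = rng.choices(tokens, weights=probs, k=100)
print({t: draws.count(t) for t in tokens})
```

The counts vary from run to run with a different seed, but “blue” should dominate.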

Temperature

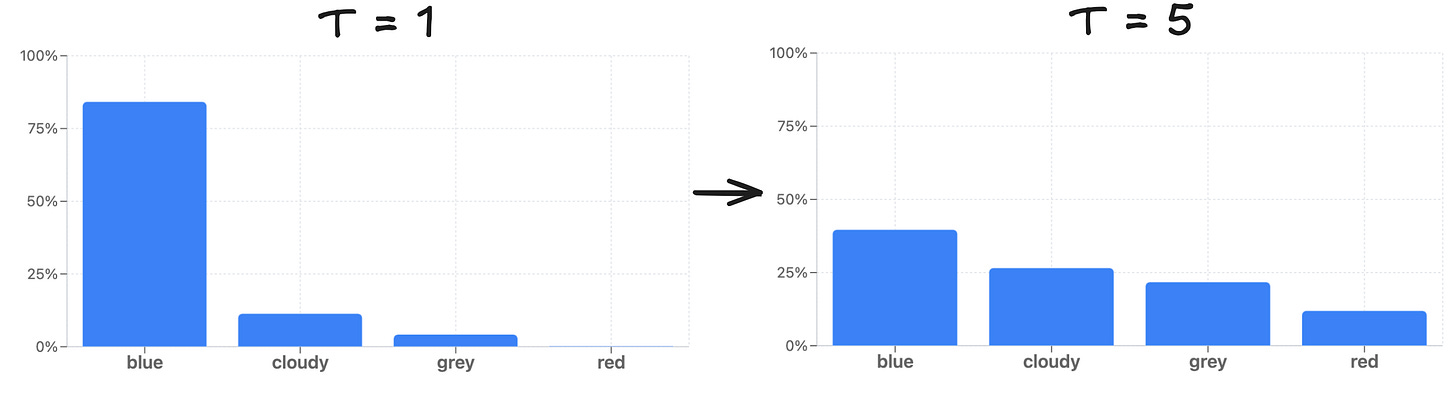

One of the most common parameters that control the randomness of the output is called temperature.

In the softmax function, temperature is a constant that every logit is divided by before the exponent is taken. This makes the resulting probability distribution “sharper” (if the temperature is below 1) or “flatter” (if it is above 1). In other words, the higher the temperature, the closer the probabilities get to each other, so the model is more likely to pick less probable tokens. Lowering the temperature has the opposite effect: it sharpens the distribution and makes the model stick to the most likely tokens.
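Written out, with T standing for the temperature and z_i and K defined as in the softmax formula, the probability of token i becomes:

$$p_i = \frac{e^{z_i / T}}{\sum_{j=1}^{K} e^{z_j / T}}$$

With T = 1 this is plain softmax. The symbol p_i is introduced here only for illustration.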

If we set the temperature (T) to 5 and calculate probabilities for our example again, we would get:

- blue: 39.7%
- cloudy: 26.6%
- gray: 21.8%
- red: 12.0%

As you can see, the distribution becomes much flatter, and words that were unlikely before now have a much higher chance of being chosen.

Higher temperature makes the model’s output more diverse but also more “risky”.

It’s common to set the temperature to 0 for consistent outputs. Technically, it can’t be 0, since the logits can’t be divided by zero. In practice, a temperature of 0 means the model simply does “greedy decoding”, skipping both the temperature adjustment and softmax.
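Here is a small sketch of temperature scaling, including the special case for 0. The function name `token_probs` is made up for this example; real inference engines may handle temperature 0 differently.

```python
import math

def token_probs(logits, temperature):
    """Turn logits into probabilities after dividing them by the temperature."""
    if temperature < 0:
        raise ValueError("temperature must not be negative")
    if temperature == 0:
        # Greedy decoding: skip scaling and softmax, put all probability on the top token.
        top = max(range(len(logits)), key=lambda i: logits[i])
        return [1.0 if i == top else 0.0 for i in range(len(logits))]
    scaled = [z / temperature for z in logits]
    m = max(scaled)  # subtracting the max avoids overflow and leaves the result unchanged
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]

logits = [9.0, 7.0, 6.0, 3.0]  # blue, cloudy, gray, red

for t in (0, 1, 5):
    print(t, [f"{p:.1%}" for p in token_probs(logits, t)])
# 0 ['100.0%', '0.0%', '0.0%', '0.0%']
# 1 ['84.2%', '11.4%', '4.2%', '0.2%']
# 5 ['39.7%', '26.6%', '21.8%', '12.0%']
```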

Try adjusting the temperature here and watch how the probability distribution shifts.

Top-K

Calculating softmax for every logit in the LLM vocabulary, which can contain 128,000 tokens or more, is computationally expensive. Instead of sampling from the entire vocabulary, the Top-K strategy considers only the tokens with the k highest logits (where k is a parameter). For example, if k = 50, probabilities are calculated only for those 50 tokens instead of all 128,000.

A smaller k value makes the text more predictable but less interesting.
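A minimal sketch of Top-K sampling, reusing the “The sky is…” logits: keep the k highest logits, apply softmax to those only, and sample. The helper `top_k_sample` is hypothetical and written for readability, not speed.

```python
import math
import random

def top_k_sample(logits, k, rng=random):
    """Sample a token index, considering only the k tokens with the highest logits."""
    if k < 1:
        raise ValueError("k must be at least 1")
    # Indices of the k largest logits, best first.
    top = sorted(range(len(logits)), key=lambda i: logits[i], reverse=True)[:k]
    best = logits[top[0]]
    # Softmax numerators over the k survivors; random.choices normalises the weights.
    weights = [math.exp(logits[i] - best) for i in top]
    return rng.choices(top, weights=weights, k=1)[0]

tokens = ["blue", "cloudy", "gray", "red"]
logits = [9.0, 7.0, 6.0, 3.0]
rng = random.Random(0)  # seeded only to make the demo repeatable
picks = [tokens[top_k_sample(logits, k=2, rng=rng)] for _ in range(10)]
print(picks)  # only "blue" and "cloudy" can appear
```

Sorting the whole vocabulary is the simplest way to find the top k. For a large vocabulary, a partial selection such as `heapq.nlargest` would avoid the full sort.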

Top-P

As you can imagine, always sampling from the top K tokens can be suboptimal. For a yes/no question, the model should ideally choose between just two tokens: yes or no. But if you ask it to write a poem, you want a larger pool of tokens to encourage creativity.

That’s where Top-P (nucleus) sampling comes in. Instead of fixing K, it selects the smallest set of tokens whose probabilities add up to at least a threshold P, usually 0.9 or 0.95. Since the probabilities of all tokens sum to 1, this subset covers the most likely ones while excluding very unlikely options.

In our earlier example, where “blue” has probability 0.842 and “cloudy” 0.114, setting P = 0.95 would limit sampling to these two tokens, since together they reach the threshold (0.842 + 0.114 = 0.956).

The Top-P strategy doesn’t make sampling more efficient, but it makes responses more coherent.
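The selection step can be sketched as follows, using the rounded probabilities from the “The sky is…” example. The names `nucleus` and `top_p_sample` are made up for this illustration.

```python
import random

def nucleus(probs, p):
    """Return indices of the smallest set of top tokens whose probabilities sum to at least p."""
    order = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)
    kept, cumulative = [], 0.0
    for i in order:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= p:
            break
    return kept

def top_p_sample(probs, p, rng=random):
    """Sample a token index from the nucleus only."""
    kept = nucleus(probs, p)
    # random.choices renormalises the weights of the surviving tokens.
    return rng.choices(kept, weights=[probs[i] for i in kept], k=1)[0]

tokens = ["blue", "cloudy", "gray", "red"]
probs = [0.842, 0.114, 0.042, 0.002]

print([tokens[i] for i in nucleus(probs, 0.95)])  # ['blue', 'cloudy']
print([tokens[i] for i in nucleus(probs, 0.5)])   # ['blue']
```

Note that the size of the kept set changes with the shape of the distribution, which is the whole point: a confident model keeps one or two tokens, an uncertain one keeps many.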

Stopping Condition

So we asked an LLM to complete a sentence. It generated logits for all possible next words, softmax turned them into probabilities, and a sampling strategy picked the next word. The process repeated. But when does it stop?

There are two stopping conditions:

1. The output hits the maximum token limit. This is a parameter you can set. Stopping this way is not ideal, since it either cuts the response mid-sentence or produces an overly long, costly output.

2. The LLM generates an <end_of_sequence> token. This is the usual and preferred condition. LLMs are trained to produce a special token when the response is complete. You can think of it like pressing “send” after finishing a message.
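Both stopping conditions fit into one short generation loop. In this sketch, `next_token` is a hypothetical stand-in for “run the model and sample one token”, and the `"<end_of_sequence>"` string is simply the placeholder name used in this article; real models use their own special tokens.

```python
EOS = "<end_of_sequence>"

def generate(next_token, prompt_tokens, max_new_tokens):
    """Generate tokens until the model emits EOS or the token limit is reached.

    `next_token` is any function that takes the tokens so far and returns the next one.
    Returns the generated tokens and the reason generation stopped.
    """
    output = []
    while len(output) < max_new_tokens:  # stopping condition 1: token limit
        token = next_token(prompt_tokens + output)
        if token == EOS:                 # stopping condition 2: end-of-sequence token
            return output, "eos"
        output.append(token)
    return output, "max_tokens"

# Toy "model" that replays a fixed script instead of sampling.
script = ["blue", "today", EOS]
def scripted(tokens_so_far):
    return script[len(tokens_so_far) - 3]  # the prompt below has 3 tokens

prompt = ["The", "sky", "is"]
print(generate(scripted, prompt, max_new_tokens=10))  # (['blue', 'today'], 'eos')
print(generate(scripted, prompt, max_new_tokens=1))   # (['blue'], 'max_tokens')
```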

Constrained Sampling

Many tasks require an LLM to generate output that follows a specific grammar. For example, it might need to produce a valid SQL query or a JSON object that matches a schema. This is critical because LLM outputs are often used in applications, and even a missing bracket in JSON can break downstream steps.

Even prompt engineering won’t guarantee that the LLM will follow your instructions and stick to the right format, whether you say “please” or not. To solve this, we can constrain sampling to tokens that preserve the grammar.

Previous sampling strategies focused on weighted sampling from a subset of tokens based on K or P parameters. Constrained sampling goes even further by allowing the model to choose only tokens that keep the output valid.

This can also speed up generation. Sometimes the grammar leaves only one valid next token, such as a closing bracket that must come next. In these cases, the engine can skip sampling and output the token directly.
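The core idea can be sketched as masking: before sampling, drop every token the grammar does not allow. In this illustration the set of allowed tokens is passed in by hand; a real engine would derive it from the grammar and the output so far. The function name and inputs are assumptions, not any library’s API.

```python
import math
import random

def constrained_sample(logits, allowed, rng=random):
    """Sample a token, considering only tokens that keep the output valid.

    `logits` maps token -> logit; `allowed` is the set of tokens the grammar permits next.
    """
    candidates = [t for t in logits if t in allowed]
    if not candidates:
        raise ValueError("the grammar allows no token from the vocabulary")
    if len(candidates) == 1:
        return candidates[0]  # only one valid token, so no sampling is needed
    best = max(logits[t] for t in candidates)
    weights = [math.exp(logits[t] - best) for t in candidates]
    return rng.choices(candidates, weights=weights, k=1)[0]

# Invented logits for the token after '{"is_spam": ' in a JSON object.
logits = {"Sure": 8.0, "true": 5.0, "false": 4.0, "}": 1.0}

rng = random.Random(0)  # seeded only to make the demo repeatable
print(constrained_sample(logits, allowed={"true", "false"}, rng=rng))  # never "Sure"
print(constrained_sample(logits, allowed={"}"}))                       # }
```

Even though “Sure” has the highest logit, it can never be picked, because it would break the JSON.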

Constrained sampling paired with greedy decoding might turn your LLM into the most powerful extraction tool.

Constrained sampling is powerful and should be in every AI engineer’s toolkit, but it has downsides. It can be hard to implement, though many providers and engines (such as vLLM) support common grammars out of the box. Some studies also report that it can reduce LLM performance on reasoning tasks.

What’s next?

You can experiment yourself with different sampling techniques! I’ve created a Python notebook for you to explore and understand sampling from scratch.

Now you should be able to answer these questions confidently:

What is sampling in LLMs and why do we need it?

What sampling strategies exist?

What are the limitations of “greedy decoding”?

How do the Top-K and Top-P strategies work?

If you have any questions, leave a comment or reach out to me on LinkedIn.