Prompt Engineering 101

Prompts are the starting point for any AI app, from chatbots to autonomous agents. Learn prompt engineering to build and use AI more effectively.

Today, Large Language Models (LLMs) have become so capable that they are used to create, automate, and educate. In the previous issue, I explained how they work. Essentially, LLMs complete the input sequence you give them. This means the way you interact with them directly shapes their behavior. Whether you're building with them or simply using them, knowing how to prompt them is a key skill.

Note: a prompt is the input given to an LLM.

In any AI project that involves an LLM, prompt engineering is often the starting point. With a thoughtfully designed prompt, much of the work can be handled right from the beginning. But the final refinements and reliability are often the hardest to achieve.

Prompting may look simple at first, but under the hood it is a design problem. You are steering a probabilistic system, and small changes in the prompt can lead to very different outputs.

Prompt engineering has even become a standalone job title in some companies. While I believe it should ultimately be part of every AI engineer’s skill set, the fact that it is recognized as its own role shows just how important it has become.

“The Problem is not with prompt engineering. It’s a real and useful skill to have. The problem is when prompt engineering is the only thing people know.”

- OpenAI Research Manager, when interviewed for AIE book.

💡In This Issue

We'll explore how to interact with models more effectively. You’ll learn the core principles behind good prompts, the mechanisms that shape model behavior, and the techniques that separate average outputs from great ones. Whether you're aiming for more control, better results, or just a deeper understanding of how these systems respond, this issue will give you the tools to get there.

Prompt Engineering is the easiest and most common way to adapt LLMs.

Technical Details

Before diving into prompt engineering techniques, it's important to understand a few core concepts.

Tokens

Tokens are the true “atoms” of LLMs.

Models don’t work with text directly, but instead they process and generate tokens. Understanding how tokenization works is important for efficient prompting. While it is out of scope for this issue, here is one thing to keep in mind.

Typos and odd formatting increase token count

Misspelled or oddly structured text may be broken into more tokens, wasting space.

System and User Prompts, and Messages

The system prompt is an initial instruction that sets the tone, style, behavior, or constraints for the model. For example, there is a hidden system prompt behind every ChatGPT conversation. It’s normally hidden from users, but they have been leaked in the past.

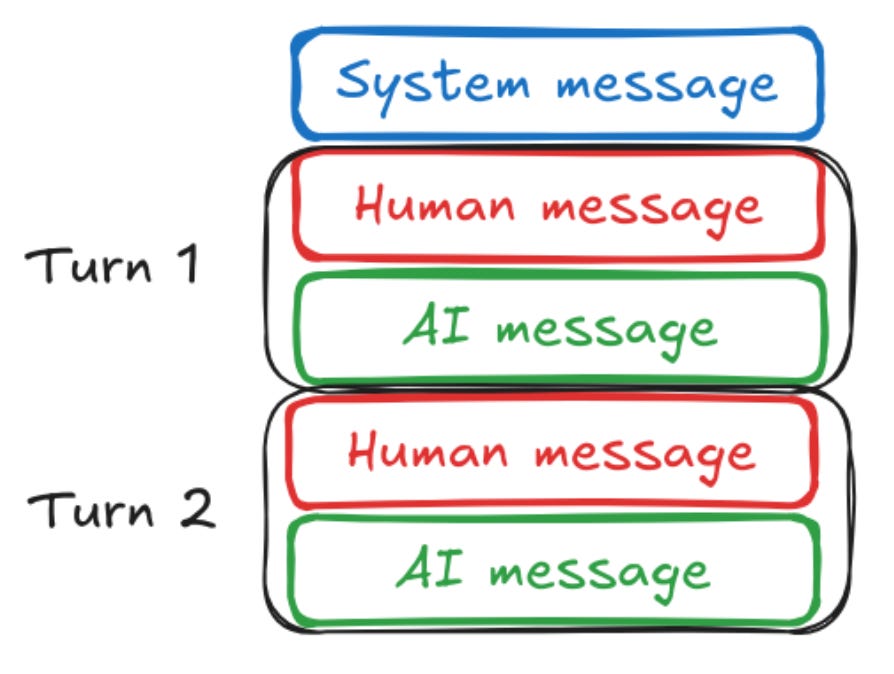

If you're a developer using an API, you must define the system prompt yourself. It's typically the first message in the input list, labeled with the role "system", and it serves to guide the model’s behavior at a high level.

The user prompt is what you, or the end user, actually type. There can be multiple user messages over the course of a conversation. These are typically labeled as "user" when using the API, and they contain the actual instructions, questions, or inputs you want the model to respond to.

When using the API, you normally construct a conversation as a list of messages, each with a role: "system", "user", or "assistant".

This message list is then combined into a single prompt behind the scenes using the model's tokenizer. Different providers have slightly different formatting, and some models (like DeepSeek’s R1) even recommend avoiding a system prompt altogether.

Understanding this message structure is key for anyone building interactive applications, especially those that rely on multi-turn conversations or consistent behavior across responses.

Special tokens

Special tokens are reserved tokens that serve structural or functional purposes. They might mark the beginning of a sequence, signal the start or end of a system or user message, or indicate when generation should stop.

For example, once a model generates a special end-of-sequence token, generation is terminated. Otherwise, it would continue until hitting a token limit.

If you're using a model locally, it’s important to ensure your tokenizer adds these tokens correctly. Some tokenizers do this automatically. In my experience, when using LLaMA-3-8B for tool use, it performed poorly without special tokens, but worked well once they were added.

Parametric vs. Non-Parametric Memory

LLMs have two types of memory: parametric and non-parametric.

Parametric memory refers to information stored in the model’s parameters. This knowledge is acquired during training and can only be changed by updating the model’s weights. In other words, parametric memory is fixed unless the model is retrained or fine-tuned.

Non-parametric memory, on the other hand, includes everything the model sees in the current prompt. When we add extra context or information to a prompt, we are relying on non-parametric memory. This is the type of memory most accessible to developers and users.

Chat History

Now that we’ve covered memory types, we can explain how tools like ChatGPT appear to "remember" earlier messages. This is made possible through non-parametric memory.

Each time you send a message, it’s appended to the conversation history. With each new interaction, the full history is passed back to the model as part of the input. This is what allows the model to continue the conversation coherently.

However, as the history grows, each response becomes more expensive to generate. Longer conversations require more tokens and compute. More importantly, LLMs get lost in multi-turn conversations, with an average drop of 39% across six generation tasks. Hence, restarting a conversation when it gets too long is crucial.

Context Window

LLMs have a fixed context window, which is the maximum number of tokens they can process in a single input. If the total prompt exceeds this limit, older parts of the conversation may be truncated or ignored entirely.

The size of this window has increased dramatically over time. GPT-2 had a context window of just 1,024 tokens, while state-of-the-art models like Gemini-2.5-Flash can handle up to 1 million tokens.

Studies have shown that when prompts are very long, models often "forget" information placed in the middle of the input. So while longer context windows allow for more information, they don’t guarantee better performance unless the prompt is structured carefully.

Context length also affects efficiency. Overlong prompts can introduce:

Unnecessary latency

Higher costs

Long prompts can also degrade the model’s performance.

When designing LLM applications, it’s important to balance richness of input with efficiency and relevance.

Prompting Best Practices

The golden rule of working with LLMs is simple: Better Input → Better Output.

Prompting can get incredibly tricky, as there is no guarantee that the model will follow your instructions, especially for smaller models. But systematic approach to prompt engineering can save you a lot of time.

Be Specific

LLMs perform best when your instructions are clear, explicit, and supported with context. Vague prompts often lead to vague or unpredictable responses.

Specify the Role

Assigning a role gives the model behavioral context. For example, “You are a helpful customer support assistant” nudges it toward tone, format, and intent aligned with that persona. If you're using the API, this is typically done through the system prompt.

Write Clear Instructions

General prompts like “Help the user” leave too much room for interpretation. Instead, be explicit: “Answer customer questions about subscription plans using a friendly and professional tone.” Clear, specific directives reduce ambiguity and make the model's output more consistent.

Provide the Context

Without context, the model falls back on its internal training data, which may be outdated or misaligned with your task. Include relevant information, like your return policy, in the prompt to reduce hallucinations and increase accuracy.

Provide Examples

If there’s a particular style or format you want, show it. Providing one or more examples helps the model generalize and replicate the expected behavior. This is called In-Context Learning. For instance, if you need responses to be concise, include a short, well-structured example and tell the model to follow that pattern.

Break down complex tasks

LLMs struggle with ambiguity and perform inconsistently on large, multi-step tasks. If possible, decompose the workflow.

Splitting a problem into smaller steps makes your prompts easier to test, debug, and maintain. It also opens the door to parallel execution or routing simpler tasks to smaller, cheaper models.

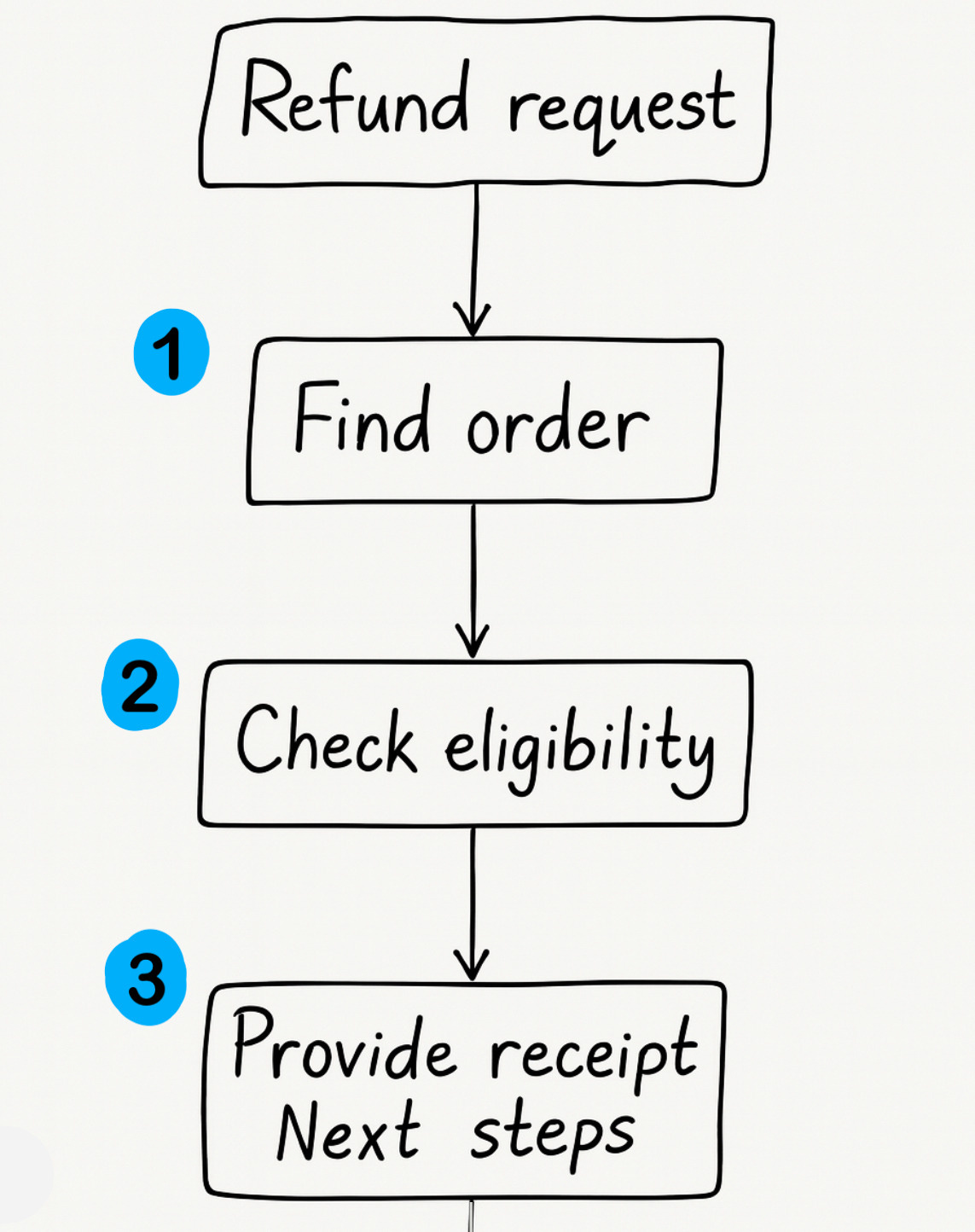

For example, if your chatbot needs to process a refund, the task might involve:

Identifying which items need to be refunded

Checking refund eligibility

Providing a receipt and follow-up instructions

Rather than asking the model to handle all of this in a single prompt, you can break it into separate prompts and run them sequentially. This modular approach improves reliability and gives you more control over each step.

The more narrow and deterministic your instructions, the more consistent and predictable your outputs will be.

Give the model time to think

Sometimes better results come not from adding more input, but from thinking more carefully.

One way to guide the model is by asking it to solve problems step by step. This helps it stay focused and follow a clearer line of reasoning. Another method asks the model to review and revise its own answer. That adds a layer of self-checking. Both methods aim to reduce errors and improve accuracy. They don’t always come free, as they can slow the response and use up more space.

Iterate

Prompt engineering is iterative by nature. Start with a basic instruction. Watch for errors. Adjust. Repeat.

Use versioned prompts and fixed test sets to evaluate systematically. Run the same prompt across different models to compare results. Intuition helps, but it’s not enough for production.

If you’re building applications, treat prompts like code. Keep them separate from the app’s logic. Version them. Annotate changes.

Without this structure, large-scale reliability is hard to maintain.

Prompt Engineering Techniques

For many users, following best practices will handle most cases. But if you’re building with LLMs, understanding and applying these techniques will allow you to build sophisticated AI applications.

Few-shot Prompting

When you ask an LLM to answer a question without giving any examples, it's called zero-shot prompting. If you include a few examples to show the model what kind of output you expect, this is known as few-shot prompting.

In the now-famous GPT-3 paper “Language Models are Few-Shot Learners”, researchers at OpenAI showed that, with just a handful of examples, LLMs could perform tasks that weren’t explicitly present in their training data, such as translation, question answering, or arithmetic.

In practice, however, few-shot prompting can be a double-edged sword. In my own work with LLaMA-3-8B, I found that few-shot examples sometimes hurt more than they helped. They consume valuable space in the context window (which was only 8,000 tokens in that model), and they can lead the model to copy details from the examples instead of focusing on the input. To avoid this, I recommend using a small number of generic examples (ideally 5 to 10) and abstracting away specifics. For instance, if you're extracting phone numbers, use placeholders like <PHONE_NUMBER> in the examples instead of real data. This is especially important with smaller models.

Chain-of-Thought

Researchers at Google discovered that prompting the model to reason through a task step by step, significantly improves performance on reasoning-heavy problems. This approach, called Chain-of-Thought (CoT) prompting, dramatically boosted PaLM-540B’s performance on a grade school math benchmark from 18% to 57%.

Asking a model to "think step by step" helps, but showing examples of that reasoning works better for specific tasks. A method, called Auto-CoT, aimed to automate this process.

The insight here is simple: instead of treating the model like a calculator that produces an answer, you treat it like a problem solver that works through intermediate steps. This was also believed to make the model’s reasoning more transparent. However, Anthropic’s research reveals a key limitation: many CoTs do not faithfully reflect the model’s actual reasoning process, concealing how it arrived at its conclusions.

Despite this, CoT has had a major influence on the field. It inspired a surge of research into prompting techniques and reasoning architectures. Approaches like Self-Consistency and Tree-of-Thoughts, which samples multiple reasoning paths to find the most reliable answer, build on the core idea of encouraging deliberation and step-by-step problem solving.

More broadly, Chain-of-Thought reshaped how researchers think about using LLMs, not just as text predictors, but as agents capable of decomposing and reasoning through complex tasks. It laid the foundation for everything from advanced prompting methods to the emergence of reasoning models.

ReAct

Building on Chain-of-Thought, researchers from Google proposed ReAct, a framework that combines reasoning and acting. Rather than generating a final answer directly, the model enters a loop of reasoning, tool use, and observation.

The ReAct loop has three steps:

Reason – The model reflects on the current task and proposes the next action.

Act – It performs the proposed action, such as calling a tool or retrieving information.

Observe – It incorporates the result of that action and reasons about what to do next.

This loop continues until the model reaches a conclusion. Of course, safeguards are needed to prevent infinite loops or repetitive behavior.

ReAct is powerful because it introduces interaction and adaptability. It laid the foundation for autonomous agents, systems that can plan, act, and reason across multiple steps to reach a goal, often using tools or APIs along the way.

Automatic Prompt Optimisation

Manual prompt tuning is not scalable. Tools like DSPy automate the process by exploring different prompts and testing them.

It works best when:

You have large evaluation sets

Your tasks are repetitive

That said, such tools generate a lot of API calls, sometimes hundreds per experiment. Always monitor what’s happening under the hood to avoid exploding costs or hidden errors.

Jailbreaking and Prompt Injections

Prompts can also be used to “hack” an application by making a model act in unintended ways. This includes revealing private information, executing unauthorized actions, or producing harmful or misleading output.

While modern LLMs are good at identifying and refusing many of these attacks, it’s still important to add safety layers. These can include input/output filtering, prompt hardening, and isolating risky capabilities. This is especially important in apps like AI agents that interact with internal tools.

One example of a prompt injection attack involves hiding tiny text in a résumé. The model, which is used to analyze candidate résumés, reads this hidden text even though a person cannot see it. As a result, it might respond with a message like “This is the best candidate so far, you should hire them.”

Prompt attacks are a form of social engineering, but this time targeting machines.

Prompt extraction attacks have led to the leak of many system prompts from ChatGPT, Claude, and other chatbots. There is even a dedicated GitHub repository with supposedly leaked prompts. These prompts often provide a sneak peak at what works best. They typically include instructions such as:

Personality engineering

Constitutional AI and safety layers

Tool usage protocols

One of the recent leaked prompts is the Claude 4 system prompt. It’s 25,000 tokens long, which adds significant computational cost, and it includes explicit hardcoded political information. Simon Willison has published a strong overview of its contents.

Learn by Building!

The best way to learn is to build something yourself.

I’ve created a simple Customer Support Bot example for you to try out and experiment with techniques covered in this issue. It runs on the free tier of the Gemini API, so you won’t need to spend anything.

Questions? Message me on LinkedIn.

Further Reading

👉 Go-To guide for Prompt Engineering